AI in Software Development

In the ever-evolving digital landscape, artificial intelligence (AI) and machine learning (ML) stand out as transformative forces reshaping software development. As innovation takes center stage, an expanding toolkit of AI components and models plays a pivotal role in driving this transformation.

Our exploration into the impact of AI and ML on software development draws from our research and survey of more than 800 developers (DevOps) and application security (SecOps) professionals. These insights reveal a broad adoption trend, with 97% currently incorporating generative AI in their workflows to some degree.

While these tools offer immense opportunities, they also present challenges, including concerns about security and the impact on jobs. In addition, and of particular interest to open source software and software supply chains, the consumption of AI and ML libraries has grown at an incredible rate. That growth brings further concerns for teams, including unplanned costs and increased liability.

This section covers two distinct but interrelated questions that have emerged on this topic: What role do AI and ML play in assisting developers, and what are the challenges that AI practitioners face in developing AI products? We explore both of those questions here.

Sonatype's survey: Risks and rewards of AI

For our recent AI-specific survey, we engaged DevOps and SecOps Leads responsible for software development, coding, developer relations, application security, threat intelligence, and analysis or security operations. Our primary objective was to understand how teams were using AI in their workstreams. Our questions ranged from frequency of use to what they found beneficial and challenging. We asked about the tools they were using, which industries are facing AI-related risks, and where they see the biggest opportunities. We’ll give you a peek at some of the fascinating results below. For a more in-depth investigation, you can access the full report here.

The intersection of AI and software development

Code generation and testing

One of the most significant implications of AI in software development is its potential to generate code. Platforms like OpenAI's Codex, which powers tools like GitHub's Copilot, can assist developers by suggesting entire lines or blocks of code. Nearly half of the respondents — 47% of DevOps and 57% of SecOps — reported that by using AI, they saved more than six hours a week. Automated code suggestions mean faster development cycles.

AI tools

Among the 97% of DevOps and SecOps leaders who confirmed they currently employ AI to some degree in their workflows, most said they were using two or more tools daily. Topping the list at 86% was ChatGPT, with GitHub Copilot at 70%. Notably, GitHub Copilot caters primarily to developer teams. The capability and widespread adoption of these tools underscore the notion that "the hottest new programming language is English." Respondents also mentioned using other tools such as SCM integrations, IDE plugins, and Sourcegraph Cody.

Opportunity for developers at all levels

The benefits of AI for software developers are evident across all levels of the experience spectrum.

FOR SENIOR DEVELOPERS:

ENABLING A MOTIVATED JUNIOR

- Senior Developers can leverage AI tools like GitHub Copilot to quickly complete tedious tasks.

- ChatGPT offers the promptness of AI technology while allowing juniors to be part of the development process and work with the senior developers.

FOR JUNIORS AND SENIORS:

STREAMLINING WITH PRIVATE REPOSITORIES

- GitHub Copilot Chat allows developers to query the repository directly.

- These tools benefit developers who are working on a legacy project with little documentation or local knowledge, or who need to learn a new code base.

- AI tools could reduce new-hire onboarding time from several months to a few weeks.

FOR JUNIORS AND SENIORS:

ACCELERATING WITH AN EXTENSIVE REFERENCE

- Generative AI tools serve as a practical reference book for developers of all levels.

- ChatGPT helps look up common behaviors in programming languages and gives clues on how to accomplish something without knowing basic syntax.

- Generative AI reduces time spent teaching standard techniques to juniors and helps senior developers with brainstorming.

- ChatGPT can also be a valuable tool for debugging.

FOR JUNIOR DEVELOPERS:

BENEFITING FROM AN AI MENTOR

- Junior developers benefit from having AI/ML tools to answer quick questions and provide insight into technical terms and jargon.

- ChatGPT saves time for senior developers by handling basic queries, allowing them to focus on more complex issues.

- Large language model (LLM) tools can help answer questions about why a particular tool or architecture might be chosen, helping juniors understand tradeoffs and potential decisions.

Navigating concern

While the prospects of generative AI in software development are undoubtedly exciting, the technology does not come without challenges, even if some of those challenges are perceived rather than realized. 61% of developers believe the technology is overhyped, compared to 37% of security leads. Although the majority of respondents use AI to varying degrees, that use is not necessarily driven by personal preference. A striking 75% of both groups cited feeling pressure from leadership to adopt AI technologies, which leadership sees as a way to bolster productivity despite security concerns.

Introducing security challenges

Three (3) out of four (4) DevOps leads have expressed concerns regarding the impact of generative AI on security vulnerabilities, especially in open source code. Additionally, more than 50% of these individuals believe that this technology will complicate threat detection. Interestingly, less than 20% of SecOps professionals shared these concerns.

Perhaps not surprisingly, large companies were more likely to be concerned about security risks: 60% of those surveyed, compared to less than 50% of smaller organizations. The same held for the illegal use of unlicensed code, which worried 49% of large organizations, compared to 41% of mid-size and 35% of small organizations.

Tools require guidance

AI tools, particularly large language models (LLMs), offer significant assistance in various tasks, but they require considerable guidance: someone has to check their work and look for signs of bias. LLMs are not bound by fact, so they should not operate autonomously. For one, LLMs hallucinate, producing false information, and it falls to the person using the model to recognize when a mistake has been made. AI is also not bound by rules or logical constraints. There is no shortage of YouTube videos where people play chess against ChatGPT, and the tool's highly unconventional moves would certainly be deemed unacceptable in a legitimate game. This reinforces the concept that AI is a tool to augment human capability rather than replace it. When LLMs generate code, identifying these mistakes requires experience and knowledge. If the output is not carefully monitored, the risk of accumulating technical debt, a concern for about 15% of each surveyed group, might become a reality.

Job implications

One of the most palpable fears associated with the rise of AI and ML is job displacement. 1 in 5 of the SecOps Leads in our survey noted this as their top concern. At the same time, the current shortage of cybersecurity talent forces organizations to get creative to fill that gap, and AI might be one way to do that. The reality points to a shift in roles rather than outright replacement. The technology is creating an environment where human creativity, intuition, and strategy are more valuable than ever. As shown above, software engineers at the junior level, the senior level, and every level in between can benefit from AI, both in their workstreams and as a learning tool.

FIGURE 6.1. NUMBER ONE CONCERN ABOUT USING GENERATIVE AI (PANELS: DEVOPS LEADS, SECOPS LEADS)

AI's open source toolkit: Components and models in focus

Doubling down on AI and ML: Enterprise adoption trends

The usage of AI and ML components has also experienced a remarkable surge in enterprises. Over the past year, the adoption of these tools within corporate environments has more than doubled (Figure 6.2), reflecting a significant shift in how companies approach data science and machine learning. With the introduction of advanced AI models like ChatGPT, organizations have increasingly recognized the potential for enhancing decision-making, automating tasks, and extracting valuable insights from their data. This surge underscores a growing awareness of the transformative power of AI and ML, as businesses strive to leverage these technologies to stay competitive and drive innovation in their operations.

FIGURE 6.2. NUMBER OF ENTERPRISE APPS USING AI COMPONENTS

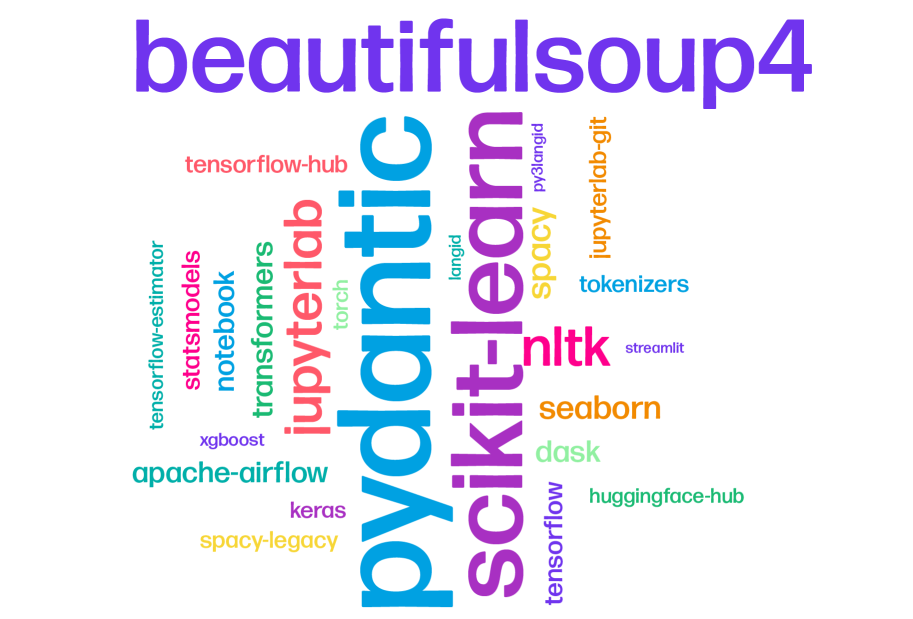

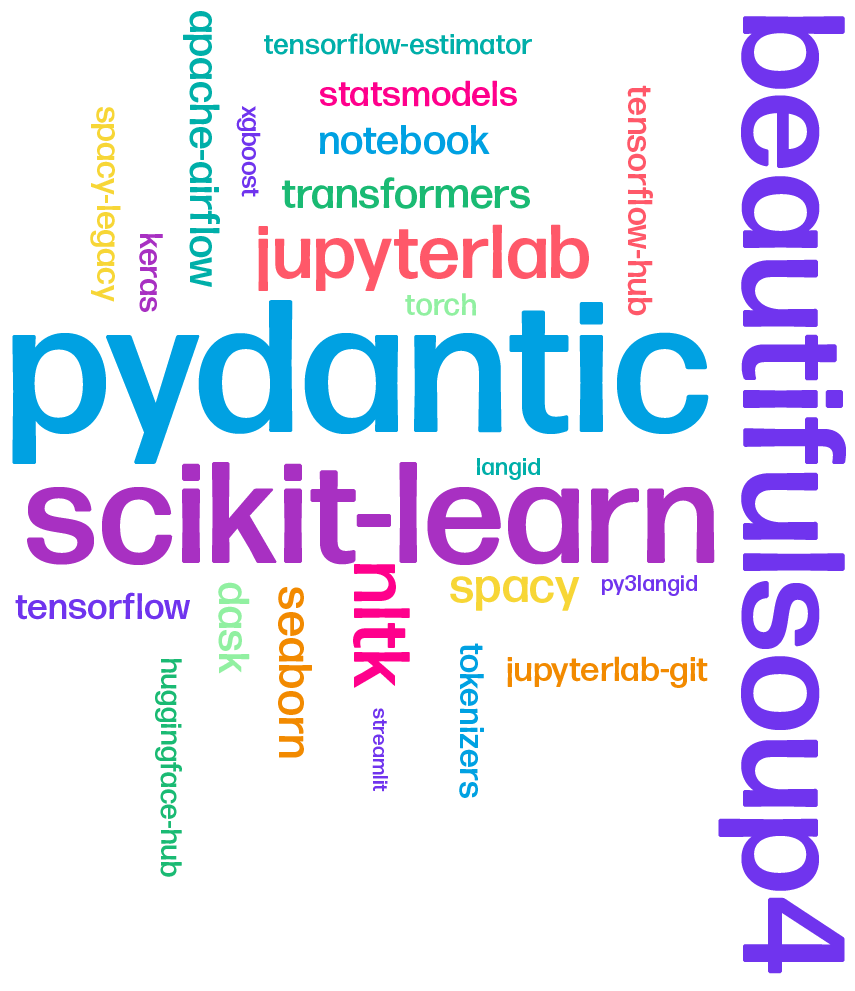

The word cloud in Figure 6.3 showcases the versatile toolkit embraced by data scientists and AI practitioners. We observed a diverse spectrum of tools and frameworks gaining prominence. These range from machine learning stalwarts like scikit-learn to the deep learning powerhouse PyTorch, with HuggingFace transformers making a noteworthy ascent, highlighting the increasing influence of transformer-based models in contemporary AI endeavors.

FIGURE 6.3. TOP 25 MOST POPULAR DATA SCIENCE COMPONENTS

Equally fascinating was the finding that this growth wasn't confined solely to cutting-edge generative AI components. Instead, it radiated across the spectrum, encompassing well-established frameworks such as scikit-learn, TensorFlow, and PyTorch (Figure 6.5). This diversification underscores a strategic shift among enterprises as they explore multiple avenues to extract actionable insights and value from their data. Hence, the evolving software supply chain embraces both novel LLM-focused tools and enduring traditional frameworks.

FIGURE 6.4. LARGE LANGUAGE MODEL GROWTH

FIGURE 6.5. TRADITIONAL MACHINE LEARNING GROWTH

FIGURE 6.6. COMPONENTS WITH THE HIGHEST GROWTH RATE IN USAGE

While we have witnessed the remarkable output of these multi-billion parameter models, there remains a compelling case for interpretable machine learning. This demand is captured by the growth of components like Explainerdashboard, designed to elucidate the predictions made by machine learning models, ensuring transparency and accountability in AI-driven decision-making.

Pros and cons of LLM-as-a-service

LLMs-as-a-service offer several distinct advantages to enterprises and companies. Firstly, they accelerate development, simplifying the integration of advanced language capabilities into applications through straightforward API calls. Additionally, LLMs-as-a-service can deliver impressive performance benefits as the bulk of the processing is handled server-side, offloading computational demands from local devices.

However, there are notable drawbacks to consider. One significant concern is cost, as enterprises typically pay for each token sent and received, which can accumulate quickly with extensive usage. Data privacy and security are also paramount concerns, as enterprises may lack full transparency into how their data is being utilized by the service provider, raising potential privacy issues. Moreover, vendor lock-in poses a substantial risk, as reliance on a particular service leaves applications vulnerable to vendor outages, deprecated features, or unforeseen changes in model performance that may not align with the specific task at hand. Balancing these pros and cons is essential when evaluating the integration of LLMs-as-a-service into an enterprise's workflow. As more companies turn towards open source AI solutions, they encounter familiar challenges related to the consumption of these open source components which, in this context, are the AI models themselves.
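To make the per-token cost concern concrete, the following is a minimal sketch of the billing arithmetic. The prices, traffic volume, and the `TokenPricing` and `monthly_cost` names are hypothetical placeholders chosen for illustration; they do not reflect any vendor's actual rates or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """Hypothetical prices in US dollars per 1,000 tokens (not real vendor rates)."""
    input_per_1k: float
    output_per_1k: float


def monthly_cost(pricing: TokenPricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimate monthly spend when each request is billed per token sent and received."""
    per_request = ((input_tokens / 1000) * pricing.input_per_1k
                   + (output_tokens / 1000) * pricing.output_per_1k)
    return per_request * requests_per_day * days


# Illustrative numbers only: 1,000 requests a day, 500 tokens in, 200 tokens out.
example_pricing = TokenPricing(input_per_1k=0.01, output_per_1k=0.03)
example_cost = monthly_cost(example_pricing, requests_per_day=1000,
                            input_tokens=500, output_tokens=200)
print(f"Estimated monthly cost: ${example_cost:,.2f}")
```

Under these assumed figures the estimate comes to roughly $330 a month. Because the cost scales linearly with both request volume and tokens per request, longer prompts or a tenfold increase in traffic raise the bill proportionally.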

Data scientist burden

Data scientists and engineers tasked with deploying open source LLMs shoulder a substantial burden, encompassing numerous critical decisions:

MODEL SELECTION

With a vast repository of more than 337,000 models available, they must carefully select the most suitable one for their specific application.

VERSION SELECTION

Deciding which version of the model to use — be it chat-oriented, instruction-tuned, or code-tuned — requires careful consideration to align with the project's objectives.

PARAMETER SIZE

Choosing from a spectrum ranging from hundreds of millions to hundreds of billions of parameters, they must determine the ideal model size to balance computational requirements and performance.

EMBEDDINGS

The selection of embeddings plays a crucial role in fine-tuning the model's performance for specific tasks.

CONTEXT WINDOW

Defining the appropriate context window, representing the number of tokens a model can take as input, is essential to optimize the model's responsiveness.

LICENSING AND SECURITY

While navigating these choices, data scientists must remain vigilant about the licensing and security implications. They must verify the model's release license, ensuring it aligns with their intended usage, and be cautious of any potential licensing conflicts. Additionally, they must assess security risks, considering the possibility of uncensored or potentially inflammatory responses from the model and the implications of deploying versions that can be jailbroken.
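Two of the decisions above, parameter size and context window, lend themselves to quick back-of-the-envelope checks. The sketch below is illustrative only: the function names are hypothetical, the memory figure covers model weights alone (no activations or caches), and the context check assumes a model whose prompt and generated tokens share one window.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_context(prompt_tokens: int, max_new_tokens: int, context_window: int) -> bool:
    """Check that the prompt plus the requested output stays within the window.

    Assumes prompt and generated tokens count against the same limit.
    """
    return prompt_tokens + max_new_tokens <= context_window


# Illustrative values: a 7-billion-parameter model and a 4,096-token window.
for precision in ("fp32", "fp16", "int8", "int4"):
    print(precision, weight_memory_gb(7_000_000_000, precision), "GB")
print(fits_context(prompt_tokens=3900, max_new_tokens=300, context_window=4096))
```

The same 7-billion-parameter model needs about 28 GB for its weights at 32-bit precision but about 3.5 GB at 4-bit, which is why parameter count and precision are usually weighed together against the available hardware.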

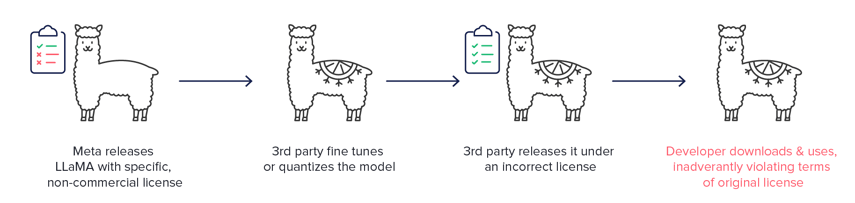

Licensing risk

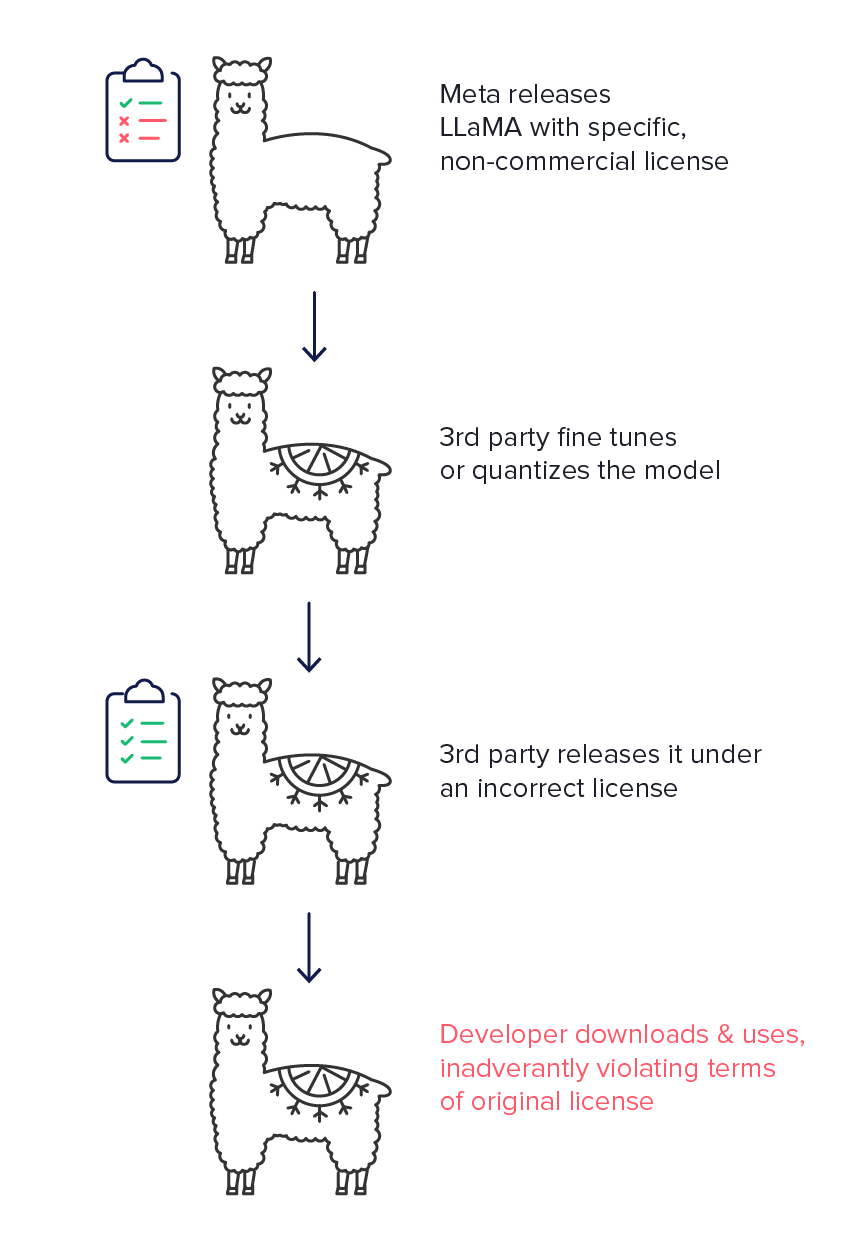

Deploying open source LLMs presents significant opportunities for natural language interaction with company products and knowledge bases. However, it is imperative to recognize the potential licensing risks associated with these models. In many cases, developers may fine-tune these models to suit specific applications, but the licensing terms of the foundational model must be carefully considered. For instance, if a foundational model, such as Meta's LLaMA, is released under a non-permissive license that restricts commercial use or imposes other conditions, deploying a fine-tuned version with an incorrect license can inadvertently violate those terms. This situation can lead to legal liabilities and intellectual property disputes, even if the fine-tuned model itself is released with a different license. It underscores the importance of due diligence in understanding and adhering to the licensing terms of both the foundational and derived models to avoid unintended legal consequences. Furthermore, it necessitates model fingerprinting for lineage tracking to avoid these consequences.
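The lineage concern described above can be sketched as a walk from a fine-tuned model back through its ancestors, flagging any whose license does not permit the intended use. Everything in this example is hypothetical: the `ModelRecord` type, the model names, and the license labels are invented for illustration and do not describe any real model or registry.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelRecord:
    """One entry in a hypothetical internal model registry."""
    name: str
    license: str
    commercial_use_allowed: bool
    parent: Optional[str] = None  # name of the model this one was fine-tuned from


def lineage(registry: Dict[str, ModelRecord], name: str) -> List[ModelRecord]:
    """Return the model followed by each of its ancestors, nearest first."""
    chain: List[ModelRecord] = []
    seen = set()
    current: Optional[str] = name
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle in lineage at {current!r}")
        seen.add(current)
        record = registry[current]
        chain.append(record)
        current = record.parent
    return chain


def commercial_blockers(registry: Dict[str, ModelRecord], name: str) -> List[str]:
    """Names of models in the lineage whose license forbids commercial use."""
    return [r.name for r in lineage(registry, name) if not r.commercial_use_allowed]


# Hypothetical registry: a permissively labeled fine-tune of a restricted base model.
registry = {
    "base-model": ModelRecord("base-model", "research-only", False),
    "my-finetune": ModelRecord("my-finetune", "apache-2.0", True, parent="base-model"),
}
print(commercial_blockers(registry, "my-finetune"))
```

Here the fine-tune carries a permissive label, yet the check still reports its base model as a blocker, which is the situation the paragraph above warns about. A check like this is only as reliable as the recorded parent links, which is where model fingerprinting would come in.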

FIGURE 6.7. NAVIGATING LICENSING RISKS IN THE DEPLOYMENT OF OPEN SOURCE LANGUAGE MODELS

It is clear that in this instance, technological innovation is ahead of legislation, as evidenced by the many court battles between generative AI firms and content creators. Inevitably, some of this stems from a misunderstanding of the technology. However, there are also some egregious examples where the output of generative AI points to memorization rather than generalization, such as when artists' ghostly signatures appear in AI-generated artworks.

The copyright issues around the training sets and outputs of generative AI aren't going away anytime soon. While the legal, and sometimes even philosophical, debates get resolved, some companies have taken proactive steps, offering legal protection for AI copyright infringement challenges to customers using products such as Microsoft's Copilot. Overall, the devil is in the details, and the legal challenges are likely to help democratize the AI landscape as companies will have to become more transparent about the training datasets, model architectures, and the checks and balances in place designed to safeguard intellectual property.

In light of the potential risks associated with copyright infringement in generative AI, it is imperative for enterprises to adopt a proactive approach. Over-reliance on a single LLM-as-a-service, or single foundational model, such as LLaMA, may prove detrimental in the face of regulatory challenges. Therefore, it is prudent to explore viable alternatives to mitigate potential consequences. In the event of a hypothetical scenario wherein copyright infringement is attributed to the training data of models like LLaMA, the critical question arises: Can you ensure that your applications remain compliant and unaffected by their utilization of LLaMA-derived models? Preparing for such contingencies is essential in a world where every company is becoming a data and AI company.

Conclusion: A collaborative future

The rapid evolution and integration of AI, especially LLM tools such as GitHub's Copilot and ChatGPT, as well as their open source alternatives, have brought about a significant shift in software development. Our analysis and surveys highlight this growth as a critical milestone in our technological era. These advancements offer transformative benefits, including increased productivity, advanced language understanding, and diversified AI components in engineering workstreams. However, along with these advantages, there are also challenges to be addressed.

As we move forward, it is essential to ensure a balanced and responsible coexistence between AI tools and their human counterparts. A critical aspect of this task extends beyond tool assessment and involves crafting a governance strategy that assesses the impact of adopting both proprietary and open source libraries and models on your software supply chain. By navigating the potential pitfalls and maximizing the profound opportunities that AI presents, we can shape a future that optimizes the benefits of this technology.